What would an LLM OS look like?

November 29, 2023

Andrej Karpathy's YouTube channel is fantastic. He just published an Intro to Large Language Models video, which is a great overview of the subject. In it, he presents the concept of an "LLM OS", which hasn't gotten enough discussion.

I don't want to speak for Andrej, but his ideas seem clearly framed in the context of ChatGPT and ways to improve its functionality (he currently works at OpenAI). But there is another interesting way to look at them, outside of ChatGPT.

Large Language Models can do a lot of things, but a prompt-based interface limits their usefulness. We are in the dark ages of manually prompting LLMs. The future will be agentic.

agentic: individuals or groups who have the ability to take initiative, make decisions, and exert control over their own actions and outcomes

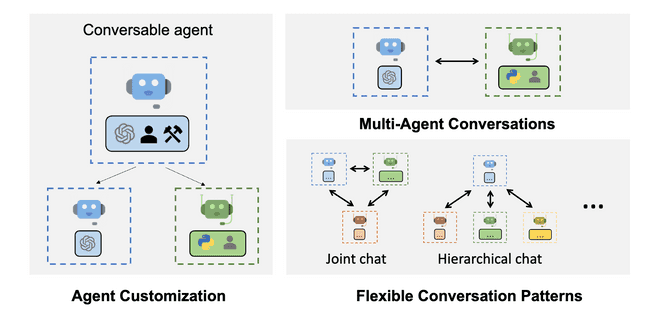

Through ReAct and similar prompting methods, agentic reasoning behavior is possible. There is a lot of research happening around this, but most of it, like AutoGen, is focused on a chat interface.

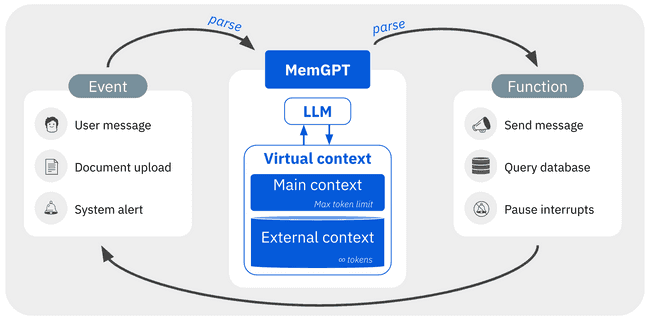
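For readers who haven't seen ReAct, the core loop is small: the model alternates between a "Thought", an "Action" that names a tool, and an "Observation" fed back from that tool, until it produces a final answer. The sketch below is illustrative only: `fake_model` is a scripted stand-in for a real LLM call, the `lookup` tool is hypothetical, and the `Action: tool[input]` text format only loosely follows the style of the ReAct paper.

```python
import re
from typing import Callable

# Hypothetical tool registry: tool name -> function taking one string argument.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup": lambda q: {"capital of France": "Paris"}.get(q, "no result"),
}

ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")
ANSWER_RE = re.compile(r"Final Answer: (.*)")


def fake_model(transcript: str) -> str:
    """Scripted stand-in for an LLM: acts once, then answers from the observation."""
    if "Observation:" not in transcript:
        return "Thought: I should look this up.\nAction: lookup[capital of France]"
    observation = transcript.rsplit("Observation: ", 1)[1].strip()
    return f"Thought: I have what I need.\nFinal Answer: {observation}"


def react(question: str, model: Callable[[str], str], max_steps: int = 5) -> str:
    """Run the Thought -> Action -> Observation loop until a final answer appears."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = model(transcript)
        transcript += "\n" + step
        if (answer := ANSWER_RE.search(step)):
            return answer.group(1)
        if (action := ACTION_RE.search(step)):
            tool, arg = action.groups()
            result = TOOLS.get(tool, lambda _: f"unknown tool {tool}")(arg)
            # Feed the tool result back so the model can reason over it next step.
            transcript += f"\nObservation: {result}"
    return "no answer within step limit"


print(react("What is the capital of France?", fake_model))  # → Paris
```

Note that the loop itself knows nothing about chat: the "question" could just as easily come from a system event.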

MemGPT is another important step: it begins to abstract away from the chat interface by treating user input as an event. However, the end goal of the system is to increase the quality of conversational agents (by managing memory to work around the limited context window).

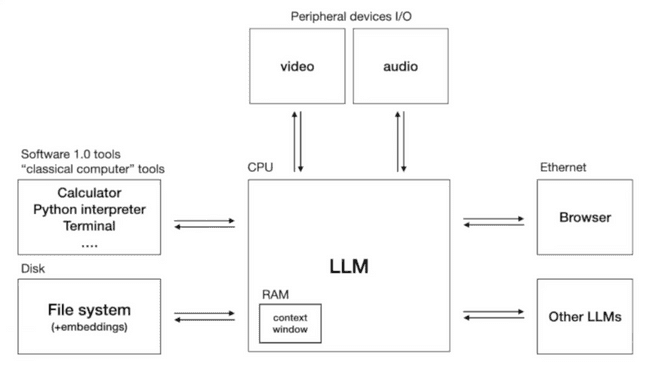
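To illustrate the "user input is just an event" idea (this is not MemGPT's actual code or API), here is a minimal sketch of an agent that consumes events of several kinds and keeps only a small window of them in context, moving older ones to an archive. The event kinds, the `Agent` class, and the tiny `context_limit` are all hypothetical choices made for the example.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Event:
    kind: str      # hypothetical kinds: "user_message", "timer", "file_changed"
    payload: str


@dataclass
class Agent:
    context_limit: int = 2                          # tiny on purpose, to show eviction
    context: deque = field(default_factory=deque)   # what the model would "see"
    archive: list = field(default_factory=list)     # evicted events, searchable later

    def observe(self, event: Event) -> str:
        self.context.append(event)
        # Move the oldest events out of the context window once it is full.
        while len(self.context) > self.context_limit:
            self.archive.append(self.context.popleft())
        # Stand-in for an LLM call: a user message is just one event kind among many.
        if event.kind == "user_message":
            return f"reply: {event.payload}"
        return f"background: {event.kind} -> {event.payload}"


agent = Agent()
for e in [Event("timer", "daily summary"),
          Event("user_message", "what's next?"),
          Event("file_changed", "report.xlsx")]:
    print(agent.observe(e))
print([e.kind for e in agent.archive])  # → ['timer']
```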

Looking at Andrej's concept of an LLM OS, we can see it is broader in scope. It could be applied as a modular architecture for agentic behavior. And in this architecture, user conversations and multi-agent systems are just a small part of the picture.

The files are inside the computer

Chat was a great introductory interface for LLMs, but it's only one possible UX paradigm, and a fairly low-bandwidth one. There is a lot of potential for LLMs to be used in other ways, and I think that's what Karpathy is dreaming about with his LLM OS concept.

Computers are tools for humans to interact with information, and current mobile and desktop OSes are the best way we've found to do that as a species. But they depend on slow, manual user input. In the same way ChatGPT has made knowledge workers more productive, integrating LLMs into the OS could multiply the productivity of every user by making the computer itself more useful.

Steve Jobs famously said that computers are like a bicycle for the mind. Autonomous semi-intelligent agents could turn computers into a spaceship for the mind.

LLMs on the edge

As anyone who has used the GPT API could tell you, it can be slow and expensive af. For this "OS" to work, it would need to be fast and cheap. This means running the model on the edge, and not in the cloud.

As much as corporations would like everything to be rented in the cloud, we each have very capable devices in our pockets and on our desks. These devices hold the keys to our digital castle, and running LLMs locally would be a great way to keep those keys in our own hands.

Apple has been pushing the idea of "on-device" machine learning for a while, and it may pay off in the long run. Not only is their Neural Engine compatible with transformers, but local inference is also very possible without it. Because of the unified memory architecture, the GPU shares the same RAM as the CPU, so a high-end discrete GPU isn't necessary. This lets Macs with plenty of RAM (32GB and up) run aggressively quantized 70B models. And we can expect Moore's Law and its derivatives to keep pushing this further.
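A back-of-the-envelope check shows why quantization matters here: weight memory is roughly parameter count × bits per weight ÷ 8 bytes. This sketch ignores the KV cache, activations, and runtime overhead, so real requirements are somewhat higher, and the bit widths are illustrative rather than tied to any particular quantization format.

```python
def weight_gb(params: float, bits_per_weight: float) -> float:
    """Approximate size of model weights in gigabytes (10**9 bytes).

    Counts weights only; KV cache and runtime overhead are not included.
    """
    return params * bits_per_weight / 8 / 1e9


for bits in (16, 8, 4, 3):
    print(f"70B @ {bits}-bit: {weight_gb(70e9, bits):.1f} GB")
```

By this estimate, 4-bit weights for a 70B model alone come to about 35 GB, so fitting one on a 32 GB machine needs roughly 3 bits per weight or fewer, while a 64 GB machine has room for 4-bit weights.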

The LLM "Kernel"

Running a tool-enabled model as a sidecar service would be a great start. Imagine the common use case of writing a small Python tool with ChatGPT. Instead of copy/pasting the code from a chat window, the sidecar process could instead create a new project in VS Code, open it for you, save the file and run it.
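A minimal sketch of that sidecar step, assuming the model has already returned the code as a string: create a project folder, save the file, and run it. The function name `materialize_project` is hypothetical, and opening the editor assumes VS Code's `code` command-line launcher is on the PATH, so that part is off by default.

```python
import shutil
import subprocess
import sys
import tempfile
from pathlib import Path


def materialize_project(name: str, code: str, root: Path, open_editor: bool = False) -> str:
    """Write model-generated code into a new project folder, run it, return stdout."""
    project = root / name
    project.mkdir(parents=True, exist_ok=True)
    script = project / "main.py"
    script.write_text(code)

    # Optionally open the folder in VS Code via its `code` CLI, if it is installed.
    if open_editor and shutil.which("code"):
        subprocess.Popen(["code", str(project)])

    # Run with the current interpreter; a real sidecar should sandbox this step
    # or ask the user before executing generated code.
    result = subprocess.run(
        [sys.executable, str(script)],
        cwd=project, capture_output=True, text=True, timeout=30,
    )
    return result.stdout


generated = "print(sum(range(10)))"  # pretend this came from the model
with tempfile.TemporaryDirectory() as tmp:
    print(materialize_project("sum_tool", generated, Path(tmp)))
```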

This sidecar approach seems to be where Microsoft is headed with Copilot, but it's still chat-based. The true magic would be to let the LLM interact with the OS directly and autonomously. User chat is just one (very important) input to the functionality of the system. For this reason, it's valuable to think of the LLM as a kernel, and not just a sidecar service.

The kernel is a computer program at the core of a computer's operating system and generally has complete control over everything in the system.

If the LLM were available globally as a system API, we could allow userland programs to register functions for the LLM to use. This would allow using the existing app stores to distribute new functionality, while users continue to use their favorite apps. The UX transition would be less jarring than hoping everyone will want to write chat messages in a web browser all day.
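To make that concrete, here is one way such a system API might look, as a sketch only: apps register named functions with a description, and the "kernel" dispatches the model's tool calls to them. Everything here (`ToolRegistry`, the `register` decorator, the `{"name": ..., "arguments": ...}` call shape) is hypothetical and not an existing OS API. The descriptions are what a model would read when deciding which tool to call.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    app: str                 # which installed app provided the function
    description: str         # natural-language hint for the model
    fn: Callable[..., str]


class ToolRegistry:
    """Hypothetical system-wide registry that userland apps add functions to."""

    def __init__(self) -> None:
        self._tools: dict[str, Tool] = {}

    def register(self, app: str, description: str):
        def decorator(fn: Callable[..., str]) -> Callable[..., str]:
            self._tools[f"{app}.{fn.__name__}"] = Tool(app, description, fn)
            return fn
        return decorator

    def manifest(self) -> list[dict]:
        """What the model would be shown: names and descriptions only."""
        return [{"name": n, "description": t.description} for n, t in self._tools.items()]

    def dispatch(self, tool_call_json: str) -> str:
        """Run a model-issued call shaped like {"name": ..., "arguments": {...}}."""
        call = json.loads(tool_call_json)
        tool = self._tools.get(call["name"])
        if tool is None:
            return f"error: no tool named {call['name']}"
        return tool.fn(**call.get("arguments", {}))


registry = ToolRegistry()


@registry.register(app="calendar", description="List events on a given ISO date.")
def list_events(date: str) -> str:
    return f"(no events on {date})"  # a real app would query its own data store


print(registry.manifest())
print(registry.dispatch('{"name": "calendar.list_events", "arguments": {"date": "2023-11-29"}}'))
```

Namespacing by app (`calendar.list_events`) is one simple way to let many installed apps contribute tools without name collisions.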

With access to all of the user's information, including session tokens, retrieval-augmented generation (RAG) will become mind-bogglingly useful. Imagine being able to ask your computer "What was that article I read last week about LLMs?" and having it find the article without your browsing history ever leaving your machine.
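The retrieval half of that query can run entirely on-device. As a deliberately simple sketch, the snippet below filters a local history to the past week and ranks entries by word overlap with the question. The `history` records are made up, and a real system would more likely use a local embedding model than word overlap; the top hits would then be handed to the local LLM as context.

```python
import re
from datetime import datetime, timedelta


def tokens(text: str) -> set[str]:
    """Lowercase alphanumeric words; a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search_history(history: list[dict], query: str, now: datetime,
                   days: int = 7, k: int = 3) -> list[dict]:
    """Rank recent history entries by how many query words their titles share."""
    cutoff = now - timedelta(days=days)
    q = tokens(query)
    recent = [h for h in history if h["visited"] >= cutoff]
    scored = [(len(q & tokens(h["title"])), h) for h in recent]
    scored = [(s, h) for s, h in scored if s > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [h for _, h in scored[:k]]


now = datetime(2023, 11, 29)
history = [  # made-up browsing history that never leaves the machine
    {"title": "Intro to Large Language Models (LLMs)", "visited": datetime(2023, 11, 24)},
    {"title": "Dachshund grooming tips", "visited": datetime(2023, 11, 25)},
    {"title": "LLMs and the future of search", "visited": datetime(2023, 10, 2)},
]
for hit in search_history(history, "What was that article I read last week about LLMs?", now):
    print(hit["title"])
```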

Time rhymes

Bonzi Buddy was a desktop assistant from the early 2000s. It was a cute little purple gorilla that would help you with your computer. It was a fun idea, but it was too early.

Not only was the wizard behind the curtain, but incentives were different: back then, the only monetization path was to get users to click on ads. Today, users are much more willing to pay for software. Additionally, device manufacturers are competing on software features to sell hardware. And the wizard is now a transformer model.

Incentives are aligned for a new generation of not only desktop assistants, but improved operating systems - and the technology has appeared at the right time.

Pondering the orb

What might the future look like? There are obvious use cases for LLMs - but here are some non-obvious ways OS-level LLMs could be used:

- Privacy-Enhanced Search: Perform personalized searches like "What article did I read about dachshunds last week?" using local browsing history, maintaining data privacy.

- Local File Management: Assist with content in local files, e.g., "Insert sales figures from last Tuesday's Excel file," using local data access.

- Intuitive Troubleshooting: When a user encounters a system error or technical issue, the LLM can provide a plain-language explanation and step-by-step troubleshooting guidance, tailored to the user's technical expertise level. This could be especially helpful for my mom.

- Emotional Tone Detection: While composing emails or messages, the LLM could analyze the text's emotional tone and suggest modifications to align with the user's intended sentiment. I don't want my draft text to be sent to the cloud for this.

- Health-Related Adjustments: If a user shows an elevated heart rate after a challenging meeting, the LLM could suggest taking a break or automatically dim the screen. It could also reword follow-up emails to be more diplomatic if the user is feeling stressed. Health data should stay local.

- Smart Local Reminders: Set reminders and manage calendar events based on content from emails or messages, all processed locally for security. "What am I forgetting to follow up on?"

- Advanced Voice Commands: Control devices with complex voice commands, e.g., "Open and start slideshow from slide 10 of yesterday’s presentation," using local data processing.

- Personalized Recommendations: For example, if a user is planning a trip, the LLM could suggest relevant travel itineraries or packing lists stored on the user’s device, based on their past travel planning documents and preferences. Knowing the user is afraid of flying from past messages, it could evaluate routes and suggest a train trip instead.

- Complex Automation: "Get me Taylor Swift tickets somewhere I can drive." (local calendar + location access) "Let me know when the food my dog likes is back in stock." (local email access)
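Some of these are less magical than they sound once the data is local. For the "What am I forgetting to follow up on?" reminder, a first pass doesn't even need a model: find threads where the user sent the last message and nobody has replied for a few days, then let the LLM summarize them. The message format and the three-day threshold below are assumptions for illustration.

```python
from datetime import datetime, timedelta


def awaiting_reply(messages: list[dict], me: str, now: datetime,
                   after: timedelta = timedelta(days=3)) -> list[str]:
    """Return thread ids whose latest message is mine and older than `after`."""
    latest: dict[str, dict] = {}
    for m in messages:
        t = m["thread"]
        if t not in latest or m["sent"] > latest[t]["sent"]:
            latest[t] = m
    return sorted(
        t for t, m in latest.items()
        if m["from"] == me and now - m["sent"] > after
    )


now = datetime(2023, 11, 29)
inbox = [  # hypothetical local mail store
    {"thread": "invoice", "from": "me", "sent": datetime(2023, 11, 20)},
    {"thread": "lunch", "from": "me", "sent": datetime(2023, 11, 21)},
    {"thread": "lunch", "from": "sam", "sent": datetime(2023, 11, 22)},
    {"thread": "roadmap", "from": "me", "sent": datetime(2023, 11, 28)},
]
print(awaiting_reply(inbox, "me", now))  # → ['invoice']
```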

There has been speculation on Twitter that OpenAI has something big to release soon. I don't think this is it - but I would be surprised if they aren't playing around with something like this.

In the same way mobile changed the way we interact with computers, LLMs may change the way we interact with information. Only time will tell, and at the rate things are moving, it may not be long.

The LLM OS is a fun concept, and if you have any ideas about it, I'd love to hear them!